Rahul Hemrajani

September 26, 2024

Can LLM Chatbots Provide Effective Legal Advice? Part 1: An Analysis of Current Capabilities and Limitations

We are thrilled to announce a new blog series titled ‘GenAI and Consumer Law’. Over the coming weeks, this series will bring you insights from an ongoing research project that explores the potential of large language models (LLMs) in enhancing India’s consumer grievance redressal system. Hear from the NLSIU research team as they navigate the possibilities and challenges of using LLMs to build public solutions and augment transformative legal reforms in India. Read more about the project here.

Rapid advancements in the field of natural language processing have led to the development of sophisticated Large Language Models (LLMs) and AI-powered chatbots. Chatbots like ChatGPT have demonstrated remarkable abilities in processing complex information and generating human-like responses across professional domains. In the legal domain, this has sparked a growing interest in the potential of LLMs to provide legal advice and assist with legal tasks. However, the question remains: can chatbots effectively replace human lawyers and provide accurate and comprehensive legal assistance?

This, and many other questions, animate the collaborative research project involving NLSIU, Meta, IIT Bombay and the Department of Consumer Affairs: ‘Exploring Digital Transformation of India’s Consumer Grievance Redressal System through GenAI’. The project aims to assess how LLMs can be used to build a specific public solution: a citizen-centric chatbot and a decision-assist tool to guide consumers and judicial authorities in the area of consumer affairs. This blog post explores the current capabilities and limitations of LLM chatbots in the legal domain.

LLMs are particularly well-suited to the legal domain due to their strengths in language understanding, knowledge representation, and structured reasoning. They can quickly process and analyse large volumes of legal documents, identify relevant cases and statutes, and generate coherent arguments and recommendations based on legal principles. For example, one study found that ChatGPT 3.5, the most basic, free version of ChatGPT, was able to pass law school exams across four courses. Other studies have shown that chatbots can pass legal eligibility tests such as the Multistate Bar Examination (MBE) and the All India Bar Examination. More strikingly, scholars have shown that LLMs can perform complex legal tasks such as contract review at a level matching or exceeding human performance, at a fraction of the cost and time.

Despite these promising results, LLMs face several limitations when it comes to providing effective legal advice. One major concern is the accuracy and reliability of the generated responses. LLMs sometimes ‘hallucinate’, that is, they generate plausible-sounding but incorrect or misleading information. In high-stakes contexts, this poses a significant risk. Second, LLMs are only as good as the data they are trained on. If the training data is biased, outdated, or lacks diversity, the chatbot’s responses are likely to reflect those limitations. The problem is compounded for legal applications in India: many existing LLMs were presumably trained primarily on the public legal materials of developed countries rather than on Indian legal data. Ensuring the quality, currency, and representativeness of the legal data used to train LLMs is an ongoing challenge.

To further investigate the ability of LLM chatbots to provide legal advice, we conducted a test using a real-world consumer law grievance from India. The grievance, sourced from a consumer complaints forum, was as follows:

I have booked a ticket with Air [XXX] from Bombay to Delhi, Flight was canceled due to a technical issue and I was informed that a full refund will be granted hence I took another flight. however, they deducted more than 95% of the ticket charges in the name of cancelation charges. customer care is pathetic, airline’s service is even worse. they did not give refreshments even after 6 hour delay plus accommodation was declined and now even the refund is declined…. I would like to sue the flight and get compensation. Tell me how. [Highlighted text supplied.]

We asked a consumer law expert how he would respond to this query, and he suggested the following course of action:

- Follow up with the airline by sending a written notice stating the issue and the loss incurred, and request a full refund. Include all relevant details (flight number, booking reference, and communication regarding the cancellation and promised refund). Request a written response within a specific timeframe.

- If the response from the airline is unsatisfactory, or there is no response within the stipulated time, escalate the matter to the airline’s Grievance Redressal Officer (GRO), the nodal officer airlines are required to appoint under Directorate General of Civil Aviation (DGCA) rules. The contact details of the GRO are available on the airline’s website. The GRO is required to resolve the complaint within 30 days.

- In parallel, file a complaint on AirSewa, the Ministry of Civil Aviation’s portal for air passenger grievances. Provide all the necessary details of your grievance along with evidence of your communication with the airline.

- Call the National or State Consumer Helpline. They will take up your complaint with the airline and follow up with them about the status.

- If the above steps do not lead to a satisfactory resolution, file a case with the District Consumer Disputes Redressal Commission. The case can be filed online. You will have to draft a complaint, get it verified by a notary public and upload it to file the case. You will also have to pay a nominal case fee.

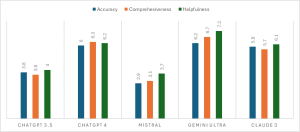

The project team presented this grievance to several popular LLM chatbots, including ChatGPT 3.5, ChatGPT 4.0, Google’s Gemini Pro, Microsoft’s Copilot, and Anthropic’s Claude 2. To evaluate the performance of these LLMs against the human response, we asked a group of 20 law students from NLSIU to rate the chatbots’ responses based on three criteria:

- Comprehensiveness: Can the chatbots access all relevant legal and factual knowledge that a human lawyer can?

- Accuracy: Do the chatbots correctly apply legal knowledge and provide valid conclusions without hallucination?

- Helpfulness: Can the chatbots deliver information in a harmless, professional, and empathetic manner?

The results of our test revealed significant limitations in the chatbots’ ability to provide comprehensive and accurate legal advice for the Indian context. ChatGPT 3.5’s response was brief and inadequate. It suggested the customer consult a (non-existent) consumer protection agency and hire lawyers, even though Indian consumer law does not require legal representation. Other free chatbots, such as Gemini Pro and Copilot, provided slightly better advice but still fell short in terms of specificity and accuracy. Claude 3 Sonnet performed the best among the free tools, suggesting the consumer court as a remedy, but it failed to mention important information such as the consumer helpline and the notice procedure.

Paid chatbots, such as ChatGPT 4, Claude 3 Opus and Gemini Ultra, produced better results but still struggled to provide comprehensive and context-specific advice. While ChatGPT 4 offered step-by-step instructions for filing a consumer case, it failed to account for the Indian location evident from the prompt and provided generic advice about consulting local lawyers and laws.

We can draw three conclusions from these results: First, chatbots do not give accurate or exhaustive legal advice when confronted with common grievances, often missing key interventions such as consumer hotlines and legal notices. Second, chatbots fail to provide specific advice tailored to the user’s situation and documents. Third, their conversational style is not professional; instead of gathering information and guiding the client through the process, they provide generic, aggregate advice based on the initial prompt.

Some of these gaps can be addressed through techniques such as prompt engineering, which involves designing better queries to elicit more precise responses, and Retrieval-Augmented Generation (RAG), which combines retrieval-based and generative models to provide context-specific information. Additionally, fine-tuning LLMs on specialised legal datasets can improve their accuracy and relevance. In the next post, we will delve deeper into some of these techniques and discuss how they can be applied to improve the performance of legal chatbots.
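To make the idea of Retrieval-Augmented Generation a little more concrete, here is a minimal sketch in Python. It assumes a tiny, hypothetical knowledge base paraphrased from the expert’s advice above, and it ranks snippets by simple word overlap as a stand-in for the embedding-based search that production RAG systems typically use. The names `KNOWLEDGE_BASE`, `retrieve` and `build_prompt` are illustrative only; they are not part of any library or of the project’s actual system.

```python
import re
from collections import Counter

# Hypothetical knowledge base: guidance snippets paraphrased from the expert's
# advice in this post. A real system would index statutes, regulations and
# official portals instead.
KNOWLEDGE_BASE = [
    "Send the airline a written notice stating the issue, the loss incurred and a request for a full refund.",
    "Escalate unresolved complaints to the airline's Grievance Redressal Officer (GRO), who must resolve them within 30 days.",
    "File a complaint on the AirSewa portal of the Ministry of Civil Aviation.",
    "Call the National or State Consumer Helpline to have the complaint taken up with the airline.",
    "File a case with the District Consumer Disputes Redressal Commission; legal representation is not required.",
]


def tokenize(text: str) -> Counter:
    """Lower-case word counts: a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def score(query: Counter, doc: Counter) -> int:
    """Number of word occurrences shared by the query and a snippet."""
    return sum((query & doc).values())


def retrieve(query: str, k: int = 3) -> list:
    """Return the k snippets with the highest word overlap (ties keep list order)."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda d: score(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 3) -> str:
    """Augment the user's complaint with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {s}" for s in retrieve(query, k))
    return (
        "You are assisting a consumer in India. Answer using only the context below, "
        "and ask for any missing details before giving advice.\n\n"
        f"Context:\n{context}\n\nComplaint:\n{query}"
    )


if __name__ == "__main__":
    print(build_prompt("My flight was cancelled and the airline refused a refund. How do I file a complaint?"))
```

In a full pipeline, the string returned by `build_prompt` would be sent to whichever LLM is being evaluated; that call is omitted here because it depends on the provider’s API. The instruction to ask for missing details is one simple prompt-engineering choice aimed at the generic, one-shot answering style described above.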

Read part 2 here.

About the Author

Dr. Rahul Hemrajani is Assistant Professor of Law at NLSIU, Bengaluru. He is part of the research team for the Project on Consumer Law with Meta, IIT-B & the Department of Consumer Affairs.