Rahul Hemrajani

November 12, 2024

Can Large Language Model Chatbots Provide Effective Legal Advice? Part 2: Using Prompt Engineering to Tailor Chatbots for Legal Applications

This is the second post in the GenAI and Consumer Law series. You can read the first post here. This series brings you insights from an ongoing research project that explores the potential of large language models (LLMs) in enhancing India’s consumer grievance redressal system. Hear from the NLSIU research team as they navigate the possibilities and challenges of using LLMs to build public solutions and augment transformative legal reforms in India. Read more about the project here.

The emergence of large language model (LLM) chatbots has opened up exciting possibilities for improving access to legal information and assistance. In our previous post, we used a test case to demonstrate some of the limitations of LLM chatbots in terms of accuracy, nuance and contextual understanding. We also emphasised the need for strategies to elicit more precise and relevant responses from LLM chatbots in the context of legal advice.

Some of the techniques to enhance the performance of existing chatbots in generating legal advice include prompt engineering, Retrieval-Augmented Generation (RAG) and fine-tuning. In this post, we focus on the first of these. Prompt engineering refers to the careful designing of prompts and instructions to guide the LLM to generate more accurate, relevant and contextually appropriate responses.

Prompt Engineering

One of the key issues identified in our test case was the lack of specificity in the consumer’s query. The original grievance simply asked the chatbot for help, without providing clear guidelines on the desired information, such as the expected length, tone, and level of detail. Moreover, the query did not specify whether the consumer was seeking substantive legal advice (e.g., which laws were violated by the airline) or procedural guidance (e.g., steps to obtain relief).

Lack of specificity in a prompt can lead to generic, irrelevant, or incomplete responses from chatbots. This issue can be addressed by prompt engineering—the process of designing and structuring input queries to guide chatbots to generate more relevant outputs. By carefully crafting input queries, we can help chatbots focus on the specific details required for accurate legal advice. For instance, prompts can be structured to include key facts, desired outcomes, and specific legal issues, thereby reducing ambiguity and improving the relevance of the responses.

Original prompt: …I would like to sue the flight and get compensation. Tell me how.

Optimised prompt: What remedies can I pursue under the law in India to get my refund? Give me specific information as well as the steps that I would have to take in order to pursue these remedies.

Refining the prompt to include specific instructions led to more comprehensive advice which included relevant legal notices, remedies available through the AirSewa portal, and the process of filing a consumer case. Although the information was still somewhat generic, it represented a marked improvement over the chatbot’s response to the original prompt. Furthermore, when prompted for additional details, ChatGPT 4.0 was able to provide more specific guidance, such as information about the e-Daakhil portal and even a draft complaint template that could serve as a starting point for the consumer’s legal action.
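As a rough illustration of what "structuring" a prompt can mean in practice, the sketch below assembles a query from the three elements mentioned above: key facts, desired outcome, and legal issue. The `GrievanceQuery` class, the `build_structured_prompt` function and the sample values are hypothetical names introduced for this example only. They are not part of the project's tooling, and the sample facts are not the wording of the actual test case.

```python
from dataclasses import dataclass


@dataclass
class GrievanceQuery:
    """Hypothetical container for the parts of a well-specified query."""
    key_facts: str
    desired_outcome: str
    legal_issue: str
    jurisdiction: str = "India"


def build_structured_prompt(query: GrievanceQuery) -> str:
    """Join the facts, goal, and legal issue with an explicit request."""
    return "\n".join([
        f"Facts: {query.key_facts}",
        f"Desired outcome: {query.desired_outcome}",
        f"Legal issue: {query.legal_issue}",
        # The closing request is adapted from the optimised prompt quoted above.
        f"What remedies can I pursue under the law in {query.jurisdiction}? "
        "Give me specific information as well as the steps "
        "that I would have to take in order to pursue these remedies.",
    ])


# Illustrative values only; they are not the wording of the actual test case.
example = GrievanceQuery(
    key_facts="An airline has not refunded my ticket.",
    desired_outcome="A refund of the ticket price.",
    legal_issue="Consumer grievance against an airline.",
)
prompt = build_structured_prompt(example)
print(prompt)
```

Keeping the fields separate makes it harder to leave one out: a query with no stated outcome or legal issue cannot be built at all.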

There are many prompt engineering strategies one can use to improve outcomes. Some of the most effective techniques include:

- Role-Playing: Assigning a specific persona or role to the chatbot can help contextualise the query and guide the chatbot’s response. For instance, instructing the chatbot to ‘act as a professional lawyer who is an expert in consumer law’ can lead to more focused and relevant advice.

- One-Shot or Few-Shot Prompting: Providing a single example (one-shot prompting) or multiple examples (few-shot prompting) of the desired output can help the chatbot understand the expected pattern or style of the response.

- Chain-of-Thought Prompting: Asking the chatbot to break a complex task into smaller sequential steps, and to reason through each one, can make its reasoning more transparent and easier to check. This approach is particularly useful for legal problem-solving, where step-by-step guidance is often necessary.
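The three strategies above can be sketched as plain string templates. This is a minimal sketch and not the prompts used in our study: the function names, the example and response labels, and the list of reasoning steps are all assumptions made for illustration, and the example pair is a placeholder rather than real legal content.

```python
from typing import List, Tuple

# Role text taken from the role-playing example above.
ROLE = "Act as a professional lawyer who is an expert in consumer law."


def with_role(query: str, role: str = ROLE) -> str:
    """Role-playing: put a persona instruction before the query."""
    return f"{role}\n\n{query}"


def with_examples(query: str, examples: List[Tuple[str, str]]) -> str:
    """One pair gives one-shot prompting; several pairs give few-shot."""
    shots = "\n\n".join(
        f"Example query: {q}\nExample response: {a}" for q, a in examples
    )
    return f"{shots}\n\nQuery: {query}\nResponse:"


def with_steps(query: str, steps: List[str]) -> str:
    """Chain-of-thought: ask the model to work through numbered steps."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{query}\n\nWork through these steps in order and explain your "
        f"reasoning at each one:\n{numbered}"
    )


query = "What remedies can I pursue under the law in India to get my refund?"

# Hypothetical step list; a real one would depend on the task.
steps = [
    "Identify the relevant facts.",
    "Identify the applicable law.",
    "List the available remedies.",
    "Describe the steps to pursue each remedy.",
]

# Strategies can be layered, here role-playing on top of chain-of-thought.
combined = with_role(with_steps(query, steps))

# Placeholder example pair, to be replaced with a real query and response.
one_shot = with_examples(query, [("<example grievance>", "<example advice>")])
print(combined)
```

Because each helper takes a string and returns a string, the strategies compose in any order, which makes it easy to try different combinations on the same query.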

Not all of these strategies will work effectively for all problems, and often you will need a combination of different strategies to get the desired results. For example, legal drafting works best when you give the chatbot a template, and legal summarisation works best with a combination of role-playing and chain-of-thought prompting. There are many guides to good prompting, including OpenAI’s Prompt Engineering Guide and Google’s prompt guide. There are also many libraries of effective prompts such as Claude’s prompt library and a GitHub repository of prompts. Finally, there are meta-prompt tools that can help you build good prompts using natural language, such as Claude’s helper metaprompt and a custom GPT called prompt refiner.

To demonstrate the impact of prompt engineering, we applied two strategies to our consumer law grievance test case.

- Prompt Strategy 1 | Role-playing and Contextualisation: We assigned the chatbot the role of a professional lawyer specialising in consumer law and provided specific instructions on the desired information, such as the legal remedies available and the steps to pursue them. The full prompt is here.

- Prompt Strategy 2 | One-shot prompting: In addition to the information given in Strategy 1, we provided examples of possible remedies in consumer law for other fields.

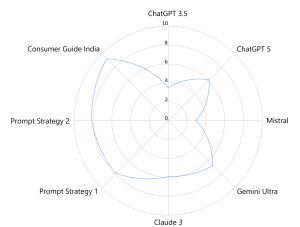

The results were promising. Student evaluators rated the responses generated by ChatGPT under Prompt Strategy 1 an average of 7.89 out of 10, and Prompt Strategy 2 received an average rating of 8.13. Both scores were noticeably higher than the 6.7 rating given to the chatbot’s response to the original, unoptimised prompt.

It is clear that effective prompts require users to ask for specific information and provide clear guidelines on the desired form of advice. However, this expectation may not align with the realities of many consumers in India. Given our low literacy rates and limited English proficiency, most consumers may struggle to frame their queries in a precise and structured manner. They are more likely to pose their questions in the same way they would on consumer forums, which often lack the specificity and clarity needed to elicit the most accurate and relevant legal advice from the chatbots. Moreover, crafting effective prompts often requires a certain level of technical skill and creativity, which may be beyond the capabilities of the average consumer. Framing a precise prompt that captures all the necessary details and nuances of a legal issue is a challenging task, and it may be unrealistic to expect consumers to possess the expertise needed to do so effectively.

One potential solution to address the limitations of user-generated prompts is to incorporate pre-defined system prompts into the chatbot’s framework. By hardcoding specific instructions and guidelines into the chatbot’s initial prompt, we can ensure that the chatbot automatically receives the necessary context and direction to provide accurate and relevant legal advice, even if the user’s query is not optimally structured. Here is an example of such a system prompt that could be added to the chatbot.

You are a consumer law help bot who helps people with the redressal of consumer law grievances in India. When a consumer tells you about a grievance, you must provide them with the remedies they can pursue under the law in India to get relief. Also give specific information as well as the steps that they would have to take in order to pursue these remedies. In case of consumer grievances, always first suggest the National Consumer Helpline (1800-11-4000). For filing a consumer case, tell the consumer about the e-Daakhil portal.

With a system prompt like this in place, the chatbot would be primed to provide specific advice about the consumer helpline and the e-Daakhil portal even if the consumer does not explicitly ask for this information. This approach could help bridge the gap between the ideal prompt structure and queries posed by consumers without the technical expertise to craft optimal prompts.
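To make the mechanism concrete, here is a minimal sketch of how a fixed system prompt might be paired with whatever the consumer types. It assumes the role and content message layout that many chat-style LLM APIs accept. The exact format depends on the provider, and `build_messages` is a hypothetical helper, not part of any library. No model is actually called.

```python
from typing import Dict, List

# System prompt text taken from the example above.
SYSTEM_PROMPT = (
    "You are a consumer law help bot who helps people with the redressal of "
    "consumer law grievances in India. When a consumer tells you about a "
    "grievance, you must provide them with the remedies they can pursue under "
    "the law in India to get relief. Also give specific information as well as "
    "the steps that they would have to take in order to pursue these remedies. "
    "In case of consumer grievances, always first suggest the National "
    "Consumer Helpline (1800-11-4000). For filing a consumer case, tell the "
    "consumer about the e-Daakhil portal."
)


def build_messages(user_query: str) -> List[Dict[str, str]]:
    """Pair the fixed system prompt with the consumer's unedited query.

    The role/content layout is an assumption; adapt it to the chat API in use.
    """
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_query.strip()},
    ]


# The consumer's query is passed through as typed, however loosely phrased.
messages = build_messages(
    "I would like to sue the flight and get compensation. Tell me how."
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The consumer's text is never rewritten. The instructions about the helpline and the e-Daakhil portal travel with every request, because they live in the system message and not in the user's query.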

System prompts have their own limitations. It is not feasible to account for every possible sector, grievance, or legal scenario through pre-defined prompts. In the above example, the chatbot may not provide information about AirSewa as it is not specifically mentioned in the system prompt. Given the limited context window of most LLMs, striking the right balance between specificity and comprehensiveness in system prompts is a challenging task. It also requires continuous refinement and updates as new legal issues and consumer needs emerge. These objectives may be served through other methods of altering the interaction of the LLM with the training data, which we will explore in our next post.

About the Author

Dr. Rahul Hemrajani is Assistant Professor of Law at NLSIU, Bengaluru. He is part of the research team for the Project on Consumer Law with Meta, IIT-B & the Department of Consumer Affairs.